| |

Learn the basics of openai_ros using a Turtlebot2 simulation.

Description: This tutorial will introduce you to openai_ros by making turtlebot2 simulation learn how to navigate a simple maze.Keywords: OpenAi

Tutorial Level: BEGINNER

Next Tutorial: openai_ros/Wam-V RobotX Challenge with openai_ros

Contents

- TurtleBot 2 with openai_ros:

- The Training Script

TurtleBot 2 with openai_ros:

Let's make a Turtlebot 2 robot learn to move without colliding in a simple maze.

Step 0: Setup the Simulation

You can do it in two ways:

- In the Version 2, you dont need to download any simulation. The needed code will be downloaded in the moment you launch the task environment and the robot environment.

In order for the training script to work, you also need to download and compile the openai_ros package, because it is using an openai_ros TaskEnvironment to do the job (learn more about TaskEnvironments below).

cd /home/user/simulation_ws/src git clone https://bitbucket.org/theconstructcore/openai_ros.git cd openai_ros;git checkout version2 cd /home/user/simulation_ws;catkin_make;source devel/setup.bashOr you run the simulation and openai_ros on ROSDS using a ROSject: by clicking on the following link, it will automatically get you a copy of a ROSject on ROS Developement Studio (ROSDS) containing simulation and openai_ros setup and ready to launch the simulation and start training.ROSject (watch this video to understand what is a ROSject). This ROSject has examples of all the Tasks and Robots supported at the moment in OpenAI_ROS.

Step 1: Launch the simulation

- The Simulations will be launched automatically through the Task and Robot environment selected. The world asociated to the Task environment that will appear depends on the launch file defined in the Task envirnment. The same happens with the Robot environment.

Step 2: Let's create the Environment classes

The Training Environments

The training environments are the Python classes provided by the openai_ros package.

The Gazebo Environment

As I've said before, the Gazebo Environment is mainly used to connect the simulated environment to the Gazebo simulator.

The code for this class is inside the robot_gazebo_env.py of the openai_ros package.

You have to do nothing here. This class is already embedded in the RobotEnvironment of the Turtlebot 2.

The Robot Environment

The robot environment will then contain all the functions associated to the specific robot that you want to train. This means, it will contain all the ROS functionalities that your robot will need in order to be controlled.

In the Turtlebot 2 example, this is handled by the class TurtleBot2Env defined in the turtlebot2_env.py file of the openai_ros package.

Again, you have to do nothing here, because the openai_ros package already provides you the RobotEnvironment for the Turtlebot 2. We are going to use that class in the TaskEnvironment.

The Task Environment

The task environment class provides all the context for the task we want the robot to learn. It depends on the task and on the robot!!.

In our Turtlebot2 in the maze example, the task environment class is created in the turtlebot2_maze.py file.

The Training Script

The purpose of this training script is:

* to set up the learning algorithm that you want to use in order to make your agent learn

* select the task and robot to be used

The MOST IMPORTANT THING you need to understand from the training script, is that it is totally independent from the environments. This means that, you can change the algorithm you use to learn in the training script, without having to worry about modifying your environments structure.

Let's do an example

a) Go to your catkin_ws/src directory, and create the package called my_turtlebot2_training.

Create a Python file named start_training.py inside the src directory of the package with the following code:

Let's provide execution permission to the script:

roscd my_turtlebot2_training/scripts chmod +x start_training.py

As you can see on the Python program, you are creating the Reinforcement Learning training loop, and you are using a Qlearn RL algorithm to train.

The code of Qlearning is provided by the code of the following file:

Go to your src directory, create a file named qlearn.py and paste the code of that file link into it.

Every training has an associated configuration file with the parameters required for the task

b) Inside the package, create a new folder called config and create a new file inside it, named my_turtlebot2_maze_params.yaml. Put the following code on it

my_turtlebot2_maze_params.yaml

Parameters are divided into two different parts:

environment related parameters: those depend on the RobotEnvironment and the TaskEnvironment you have selected.

RL algorithm parameters: those depend on the RL algorithm you have selected

c) Create a launch folder. Inside the launch folder, create a new file named start_training.launch. You can copy the following contents into it:

1 <?xml version="1.0" encoding="UTF-8"?>

2 <launch>

3 <!-- This version uses the openai_ros environments -->

4 <rosparam command="load" file="$(find my_turtlebot2_training)/config/my_turtlebot2_maze_params.yaml" />

5 <!-- Launch the training system -->

6 <node pkg="my_turtlebot2_training" name="turtlebot2_maze" type="start_training.py" output="screen"/>

7 </launch>

Now you are ready to launch the training code:

$ roslaunch my_turtlebot2_training start_training.launch

You should get something like the following:

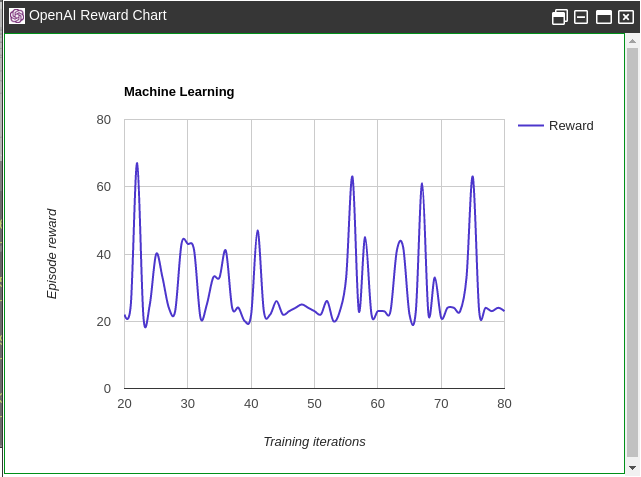

Let's also check how the reward is advancing. The openai_ros package automatically provides a topic that contains the reward obtained by each episode. The topic is called /openai/reward and you can plot it to obtain something like this:

Changing training algorithm

That structure of the training algorithm (provided by OpenAI) allows to easily change the training algorithm, without having to modify the environment or robot. That allows the easy comparison of different algorithms under the same circumstances

Exercise for home: change the Q-learn algorithm by the Sarsa algorithm (code provided in a separated file named sarsa.py), and check which one produces better results.

IMPORTANT POINT If you only want to concentrate on creating RL algorithms for training robots in already defined tasks, then you only need to concentrate on creating your learning algorithm on a Python file and then use an already existing environment. Use the start_training.py script below and modify the learning algorithm and select the environment you want. You don't need to do anythig else.

If you want to specify your own tasks or your own robots to solve the task, then you need to learn how to create Training Environments.

Create Your Own AIScript , Task or Robot Environment

Depending on what you want to do, create your own RL script, Task , or Robot Environment you will have to edit in one place or another. Lets divide it into three possible scenarios:

I want to create my own AI RL script but using an existing TaskEnvironment

This will be the most common scenario if you find that one of the already existing tasks suits your needs.

- The first step is to have a look at the great openai_examples git for inspiration on how to start:

Then Start by creating your own AI RL algorithm that uses the openai environments. Then select one of the task_environments. You can find all the list of task environments in the file : task_envs_list.py

For example, lets take the MyTurtleBot2Maze-v0.

You have to also decide where you want the system to download the necessary files for the simulations to be executed for that particular task. For example, lets take the absolute path "/home/user/simulation_ws".

Then you have to add in your yaml config file where you load the parameters for your AI script, the following two parameters to be loaded into the param server:

task_and_robot_environment_name: 'MyTurtleBot2Maze-v0' ros_ws_abspath: '/home/user/simulation_ws'

- Now you have to add the following code to your python AI RL script, before using the openai environment:

And that's it. If you execute the script and load the parameters into the param server, you the world and robot appropriate for the Task 'MyTurtleBot2Maze-v0' should be launched in gazebo.

There is this exact example here:start_training_maze_v2.launch

I want to create my own Task environment but use an existing Robot Environment

So you like the robots you find supported in openai_ros, but you want to train them to do another task.

First have a look at all the existing ones in the repository:task_env available

Then you have to create a new folder inside the 'task_envs' and inside it a folder called 'config. Inside 'config' you place the yaml file that will be loaded that has all the parameters needed for the new Task to work.

Then its time to create your own task environment file. For that you will have to create the script file here in the 'task_env/your_task/your_task.py'. Among all the structure there are two very important features to make this work: 'The world launch' and 'The Task environment registration'.

The world to launch will be done through the following lines of code placed in the 'init' of this class. Here you have a snippet on how this is done in the turtlebot2_Maze:

1 from openai_ros.task_envs.task_commons import LoadYamlFileParamsTest

2 from openai_ros.openai_ros_common import ROSLauncher

3 import os

4

5 # This is the path where the simulation files, the Task and the Robot gits will be downloaded if not there

6 # This parameter HAS to be set up in the MAIN launch of the AI RL script

7 ros_ws_abspath = rospy.get_param("/turtlebot2/ros_ws_abspath", None)

8 assert ros_ws_abspath is not None, "You forgot to set ros_ws_abspath in your yaml file of your main RL script. Set ros_ws_abspath: \'YOUR/SIM_WS/PATH\'"

9 assert os.path.exists(ros_ws_abspath), "The Simulation ROS Workspace path "+ros_ws_abspath + " DOESNT exist, execute: mkdir -p "+ros_ws_abspath + "/src;cd "+ros_ws_abspath+";catkin_make"

10 ROSLauncher(rospackage_name="gym_construct",

11 launch_file_name="start_maze_world.launch",

12 ros_ws_abspath=ros_ws_abspath)

13

14 # Load Params from the desired Yaml file

15 LoadYamlFileParamsTest(rospackage_name="openai_ros",

16 rel_path_from_package_to_file="src/openai_ros/task_envs/turtlebot2/config",

17 yaml_file_name="turtlebot2_maze.yaml")

18

19 # Here we will add any init functions prior to starting the MyRobotEnv

20 super(TurtleBot2MazeEnv, self).__init__(ros_ws_abspath)

Have you seen the 'ros_ws_abspath parameter loaded and checked that exists. That is the path you set in the AI RL script that states where all the simulation files will be downloaded if not found.

Here among other things you are 'Loading the yaml file needed' for the task, and more importantly, 'Launching the script that will start the environment needed'. 'NOT the robot', only the world. The robot will be done by the 'Robot environment'.

We have to specify from where will the simulation be downloaded. For the moment it only supports 'git embedded files'. To define this you need to edit the file openai_ros_common.py. Here is an example of how this is defined:

1 elif package_name == "iri_wam_description" or package_name == "iri_wam_gazebo" or package_name == "iri_wam_reproduce_trajectory" or package_name == "iri_wam_aff_demo":

2 package_git = ["https://bitbucket.org/theconstructcore/iri_wam.git"]

3 package_git.append("https://bitbucket.org/theconstructcore/hokuyo_model.git")

- As you can see you can place as many gits as you want to be downloaded when asked for a certain package.

Another file that has to be edited when creating a new 'Task Environment' is task_envs_list.py. Here you have to register the 'name of the task environment' that openai will use to find it and import the correct python module. Here is an example:

I want to create my own Robot Environment

Ok so you have the AI RL script, the Task but you need another robot instead. These are the steps:

First have a look at all the ones already done for you for inspiration. This is very important because some tasks call some RobotEnv methods that will have to be compatible with yours if you want to use that Task. robot_envs available

- Now create a new script for YOUR_ROBOT.py following as template the robot that resembles the most to the one used in the Task Env you want.

- Then, to launch the script that spawns the robot, you have to add these lines of code. Here is an example for the Turtlebot2 Robot:

As in the Task environment you will have to edit the openai_ros_common.py to add the package needs to launch the spawn launch ( in this example 'turtlebot_gazebo', 'put_turtlebot2_in_world.launch').

And that's it.

How to contribute?

We want OpenAI-ROS to be bigger and bigger each day, with support for more and more robots and tasks. So just 'fork' the openai_ros repo, branch version2 and place a pull request. We will process it ASAP. If for the contrary you want to create examples of Deep learning for the different tasks already available, follow the same procedure in the openai_examples_projects

Conclusion

The openai_ros package allows to clearly separate the different aspects of a Reinforcement Learning training for robots using ROS.

- If you want to concentrate on the learning algorithm for a given robot task, modify only the start_training script.

If you only want to change the robot you want to use for solving the task (same algorithm, same task), create only a new RoBotEnvironment for your robot.

If you only want to change the task that the robot has to learn (same algorithm, same robot), just create a new TaskEnvironment.

If you want to interface this to a different simulator (or the real robot), create a different GazeboEnvironment.